Building a SWE Experimentation Platform

Gain the skills GenAI benefits from, but job opportunities may not provide

My "Karl Marx Would Buy GPUs" article concluded that, with ever-concentrating corporate and personal wealth, GenAI vendors will push that disparity further. Our finances, whether as employee, solo entrepreneur, or small-company founder, face similar forces. The strategies for overcoming this slow squeeze boil down to combinations of two options:

Find a way to be more capable than the big GenAI vendors, and identify opportunities to beat them at some piece of their own game. This is challenging but not impossible, because big companies aren’t as nimble as individuals or small companies. Expect to change direction rapidly as LLM capabilities evolve.

Find something your market wants that is grounded in novelty, uniqueness, or deep insight, or that at least carries a provenance that communicates value. If you were part of the reason for the transaction, that edge can’t be instantly prompted away.


Skill Building

My own focus is the world of software engineering, so I’ve been trying to navigate a path that is about 5% of the first option and 95% of the second. A day may come when technical domain expertise ceases to be a thing, but I believe we’re a long way from that. The strongest reason I have was explored in "On the Turn of a Phrase":

We cannot think thoughts for which we lack the language.

Within software engineering — or frankly any deep-knowledge career path — that suggests a couple of observations:

If you aren’t invested in the GenAI world, then you need a deep understanding of the detailed workings of a domain, because you’re competing against an ever-changing LLM knowledge base. Surface knowledge won’t cut it.

If you are invested in the GenAI world, then your ability to generate quality outcomes improves greatly if you have deep knowledge of a domain. Surface knowledge gives your prompts too little selective power to pull the strongest results out of an infinite universe of choices. You need to know what to ask for.

Either way, as a SWE you land in a similar place. Deep skill has gone from an eventual nice-to-have to a key factor in your relevance.

You can’t gain deep skill just by reading a book:

You have to be in a position to actively experiment as you drill down.

We learn from a mix of positive and negative experiences.

These shape both what connects in our memory and where we draw the boundaries of what it is appropriate to generalize.

Those of us who have been in software engineering (or an adjacent field) for a couple of decades already had opportunities to do all that. We’ve built layers of foundational skills. I’m concerned that recent graduates, junior engineers, and even senior engineers are going to find themselves denied a similar learning process.

The Strategy

To help fill the gap, I’m rolling out a series of articles on the approach I have used myself for many years, which I’m in the midst of updating for life in an LLM world.

The idea is simple. Nothing teaches you how things work like having to actually make them work yourself. The effort of creating comprehensive infrastructure from scratch will teach you far more than a hundred LeetCode exercises.

If you follow along and attempt any of this, you’ll in essence be building the scaffolding for your own startup. Whether or not you have a great business idea, at a minimum you’ll confront how pre-seed tech startups begin to stitch themselves together, and you’ll see why they have so many rough edges. There is only so much time, only so much money, and only so much energy, and you learn that the only way to say “yes” to important tasks is to say “no” or “not yet” to unimportant ones.

Even if you aren’t in an early phase of your career, perhaps you’ve experienced what many of us do: an industry of ever-broadening technology choices that we often bounce between with increasingly shallow understanding. If you haven’t built a proper experimental playground before, this may give you the motivation to dig into all the high-leverage details that your day job may not allow.

There is another theme to this. Cloud compute and GenAI vendor APIs have something in common: both tax you for experimentation, and both can punish you badly for expensive mistakes when establishing budget guardrails.

The Experimentalist Laboratory

The approach here is to run mostly on hardware you’ve purchased for yourself. Obviously many things can be moved to the cloud, and some of them should be, but there is nothing quite like the freedom to make any purchase choice that makes sense to you, apply any configuration that helps you, and throw away anything you later discover does not serve you.

Technology should be about YOU, and not the other way around. The process here will very much be one of making, and revising as necessary, decisions that place YOU at the center of what happens and keep YOU as the primary decider of pretty much everything.

A side benefit is that, other than the initial hardware purchases, you aren’t constantly feeding mega-corps more money just to have permission to learn and push the envelope. Stop acting like their favorite flavor of catnip. They have an ample supply.

The essence of building your own laboratory for experimentation is straightforward:

The economics of the platform should be approachable.

Hardware, software, and some external services will be involved.

It likely takes more than a laptop; you’ll want something that surfaces real challenges and provides the growth potential of actual distributed systems.

There will be many moving parts, so tackle the assembly in bite-sized pieces.

Optionally include LLMs. If you do, the same hardware you use for other experiments can be used for self-hosting some LLMs.

Including LLMs opens up more avenues for learning. And if your strategy is to beat GenAI vendors at their own game, you were never going to avoid self-hosting experiments anyway.

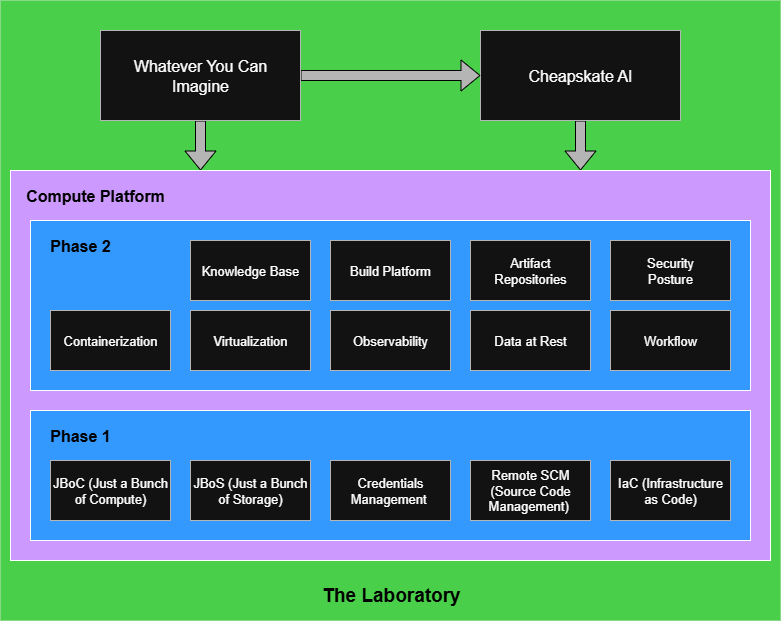

Assembling the laboratory is broken into two major sections and an optional third.

Phase 1: the basic foundation of hardware plus minimal software tooling for rolling out system configuration and application installation. It also covers ensuring that your work gets protected somewhere remote.

Phase 2: an ever-growing collection of technology artifacts and procedural progress so that, in time, you can build pretty much anything you wish.

Cheapskate AI: optionally, run LLM tooling locally to experiment with, tune, and control the behavior of the AI tools you use. The tools should behave as you wish, not as an outside vendor decides for you.

The to-do list can grow as long as your interests take it, but if you are earlier in your career, you should come out of this with a gut-level understanding of what drives many decisions at the intersection of technology and business. That awareness can become one of your selling points in a career role or a new business.

In future articles I’ll start breaking down the laboratory assembly in detail.

The Experimentalist : Building a SWE Experimentation Platform © 2025 by Reid M. Pinchback is licensed under CC BY-SA 4.0