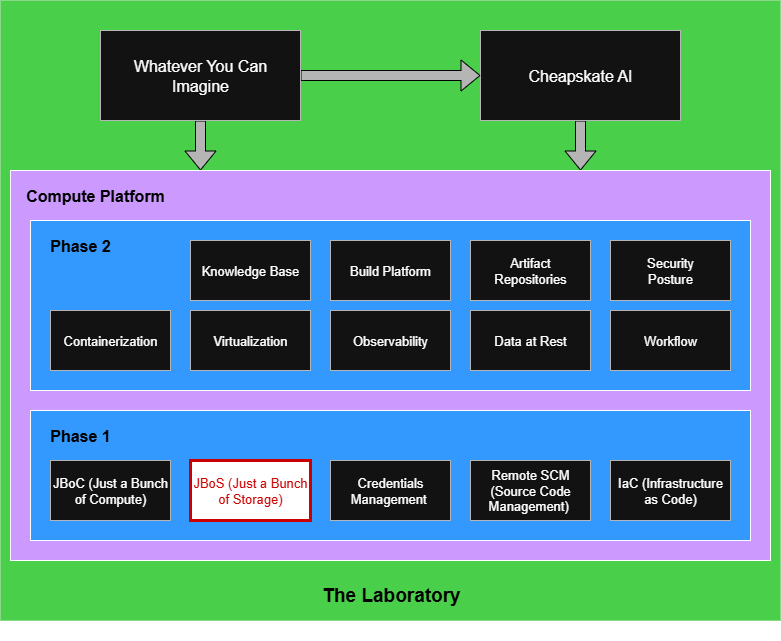

Phase 1: Just a Bunch of Storage

Experimentation laboratories need operational and data store capacity

Planning for storage in your newly-constructed lab has several elements to it:

Hardware considerations.

Filesystem considerations.

Use-case considerations.


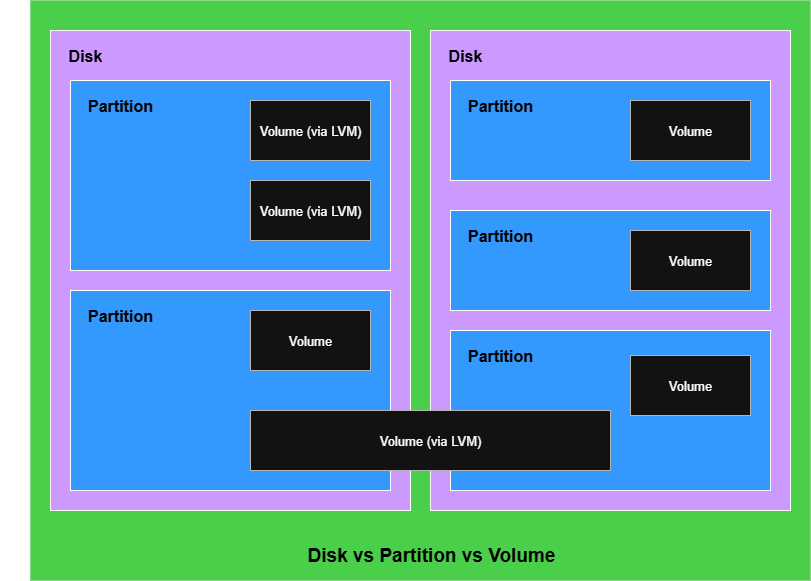

First a little terminology:

Drive: a physical device you buy and mount in or attach to a computing node.

Partition: a subdivision of the space available on the drive. There are two partitioning schemes. GUID Partition Table (GPT) is what you’ll usually see, but Master Boot Record (MBR) partitions may exist on older systems. When picking compute nodes to buy, I would suggest not getting anything so old it doesn’t support UEFI, and any UEFI system should allow GPT. GPT gives you more flexibility on partition creation and supports larger drives (MBR tops out at 2 TiB with 512-byte sectors).

Volume: a logical construct that presents available storage space to the operating system. Historically a volume usually fit within a partition, but Logical Volume Management (LVM) can present a volume that spans partitions and drives. It does this in terms of “extents,” which it uses to associate physical to logical storage.

There is some hand-waving simplification in those explanations, as partitions can be primary, extended, or logical, but those distinctions only apply when using MBR.
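To make the “extents” idea concrete, here is a toy model of how a logical volume can span partitions on different drives. It is a sketch of the concept, not how LVM is implemented: the partition names and sizes are hypothetical, the allocation is a simple fill-one-then-the-next policy, and the only real LVM detail borrowed is the default 4 MiB extent size.

```python
from dataclasses import dataclass

EXTENT_MIB = 4  # LVM's default physical extent size


@dataclass(frozen=True)
class PhysicalExtent:
    device: str  # hypothetical partition name, e.g. "/dev/sda3"
    index: int   # extent number within that partition


def build_logical_volume(partitions: dict[str, int], size_mib: int) -> list[PhysicalExtent]:
    """Map each logical extent (the list position) to a physical extent.

    partitions maps a partition name to its capacity in MiB.
    Allocation is linear: fill one partition, then spill into the next.
    """
    needed = -(-size_mib // EXTENT_MIB)  # ceiling division
    free = [
        PhysicalExtent(device, i)
        for device, capacity in partitions.items()
        for i in range(capacity // EXTENT_MIB)
    ]
    if needed > len(free):
        raise ValueError("not enough free extents for the requested volume")
    return free[:needed]


# Two deliberately tiny partitions on different drives (illustrative sizes).
pvs = {"/dev/sda3": 40, "/dev/sdb1": 40}
lv = build_logical_volume(pvs, 60)

# Logical extent 9 is the last one on the first partition;
# logical extent 10 is the first one on the second partition.
print(len(lv), lv[9], lv[10])
```

The operating system only sees the 60 MiB volume; the list is the bookkeeping that says which piece of which partition backs each slice of it.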

Local Physical Storage Use Cases

Each compute node will have storage needs to account for. For simplicity I’ll assume a Linux variant is your goal.

EFI System Partition (ESP): This provides space for boot loaders and kernel images. I plan on 1/4 GB (256 MB) for this, but if you know you intend to do a lot of experimenting with different kernels you could make it bigger to house more kernel images.

Swap Partition: You may read online content or hear discussions that advocate against swap due to performance concerns. As a database guy I have reasons to disagree with that position. You don’t have to allocate a physical partition, as you can add swap files later. I prefer to allocate the partition and size it according to the amount of RAM I installed on the compute node. You could use a smaller amount, but the less you allocate the more you need monitoring on swap activity, which is a discussion for future articles. Obviously if you have more than 64 GB of RAM, you won’t want to size swap as the entire memory footprint unless you have a strong reason to support a virtual memory space that large.

Root Partition: This will contain the volume that the boot loader establishes as your running root filesystem. When you size this allow for the fact that package updates, run-time log files, applications, and the runtime needs of those applications will all require storage.

Other Linux Partitions: While not required, you may decide that for system stability you want some of the application, app data, and logging activity to go to a separate physical partition. That would ensure the O/S itself doesn’t fall over due to the root partition filling up. The likely mount points for a volume would be /var or /opt. If you want both in a common partition, LVM can achieve that.

Maintenance Partition: Depending on the BIOS features, I’ve found it handy to add a small additional partition to write to. Sometimes when working in the BIOS you can record configuration snapshot images to remember what you changed. This relates to the “Make notes and take pictures” advice from the previous article. When you set up many nodes, sometimes one or two will not behave consistently with the others, and knowing your BIOS setup without having to boot into it can be useful. Maybe give it 1-2 GB if your BIOS saves config snapshots as actual image-formatted (e.g. BMP, PNG) files. You may find that this needs to be formatted as FAT32, which will be reported by “fdisk -l” on a UEFI/GPT setup as “Microsoft basic data.”

Data Partitions: If you know you’ll be working with large volumes of data, it is worth planning data partitions. By having separate data partitions you protect the O/S from data filling up the root filesystem. Data is also the usage pattern most likely to motivate you later to add drives or swap in larger ones. It’s less disruptive to do that with clearly-segregated data than it usually is for a large filesystem muddling everything together.
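The sizing guidance above can be turned into a quick planning sketch for a single drive. The ESP size, the swap-matches-RAM rule, and the 1-2 GB maintenance partition come from the list; the root and /var sizes, the hard 64 GB cap on swap, and the function names are assumptions chosen purely for illustration. Drive capacity is treated as binary GiB for simplicity, which slightly overstates what a marketed "512 GB" drive really provides.

```python
GIB = 1024  # MiB per GiB


def swap_size_mib(ram_gib: int, cap_gib: int = 64) -> int:
    """Swap sized to match RAM, capped once RAM exceeds cap_gib.

    The cap is an assumption: one simple way to avoid mirroring
    a very large memory footprint in swap.
    """
    return min(ram_gib, cap_gib) * GIB


def plan_partitions(drive_gib: int, ram_gib: int) -> dict[str, int]:
    """Return partition name -> size in MiB; data gets whatever is left."""
    plan = {
        "esp": 256,                   # the 1/4 GB suggested for the ESP
        "swap": swap_size_mib(ram_gib),
        "root": 64 * GIB,             # hypothetical size
        "var": 32 * GIB,              # hypothetical size
        "maintenance": 2 * GIB,       # upper end of the 1-2 GB suggestion
    }
    remaining = drive_gib * GIB - sum(plan.values())
    if remaining <= 0:
        raise ValueError("drive is too small for this layout")
    plan["data"] = remaining
    return plan


plan = plan_partitions(drive_gib=512, ram_gib=32)
for name, mib in plan.items():
    print(f"{name:12s} {mib / GIB:8.2f} GiB")
```

Running the numbers before you partition makes it obvious how much room is really left for data once the O/S, swap, and housekeeping partitions have taken their share.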

An obvious question is whether all these partitions should be on the same drive or across multiple drives. This mostly relates to the root filesystem versus everything else, although for database purists there are other scenarios.

The most direct reason for multiple drives is protecting the ability to boot from the root filesystem. There can be performance benefits to multiple drives, although it is easy to overstate the potential for non-RAID performance parallelism unless you are spec’ing out compute nodes with 2 or more CPUs, 2 or more RAID cards, and NUMA memory configuration to channel memory transfers separately. Write traffic across multiple SSDs may hold up better as a performance story without that level of hardware due to how IOPS could get batch flushed to on-SSD SRAM cache.

In the case of some database architectures there are fault-tolerance scenarios around directing the Write-Ahead Log (WAL) to separate disks. Again, this story holds up better when you have multiple RAID cards, preferably cards with battery backup for ensuring completed flushes on a power outage. The goal here would be to approximate the theoretical concept of “stable storage,” which forms the backbone for how we reason about database transactions and database recovery.

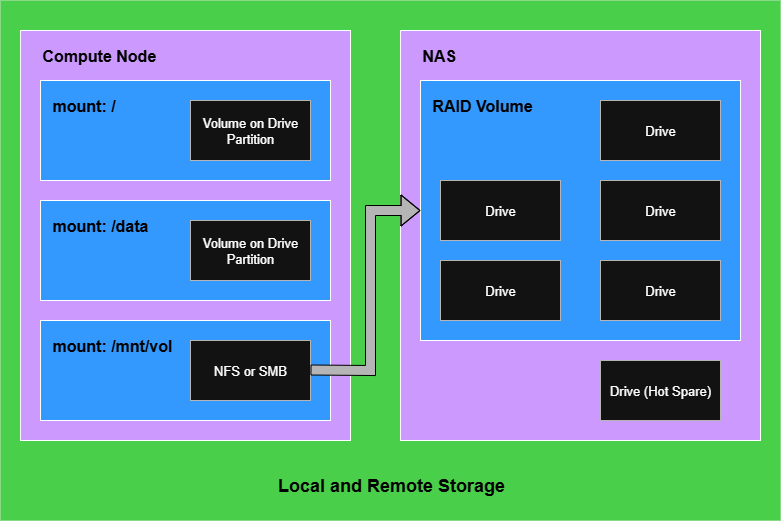

Remote Storage Use Cases

It is important to keep in mind that you’re creating an experimental lab platform. You should be able to set up and tear down compute nodes at will, or alter some aspect of their current application provisioning and configuration.

This will push you towards having externalized storage so that any data you care about will live across those major system alterations.

You are also going to run into cases where you want the same data available across multiple compute nodes. Having multiple nodes mount the same remote volume is useful for allowing tasks to run anywhere and be matched up to the data required.

The straightforward solution to remote storage is Network Attached Storage (NAS). I want to dig into NAS solutions more in later articles, but for now the outline is:

You can either build your own, or buy a NAS. If you’re thinking about lab creation like a small startup business, you probably want to just buy something so you can focus your time elsewhere. Pick a reliable, established vendor and product line.

You want your NAS to support the protocols you intend to use for file sharing. The two most likely are Server Message Block (SMB) and Network File System (NFS). There are others, but those are what you’ll typically use. Use the most recent stable version of a protocol available.

The bulk of the storage should be in a RAID configuration that helps preserve your data. I prefer RAID 6 plus a hot spare, because I’m willing to trade the cost of some storage for increased protection against disk failure. Investigate RAID options and pick the configuration that suits your situation.
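When comparing RAID options it helps to see what the protection costs in capacity. RAID 6 spends two drives’ worth of space on parity, and a hot spare sits idle until a rebuild needs it. A minimal sketch of that arithmetic follows; the function name and the 8-bay, 4 TB example are hypothetical, and real usable space will be a little lower after filesystem overhead.

```python
def raid6_usable_tb(total_bays: int, drive_tb: float, hot_spares: int = 1) -> float:
    """Usable capacity of a RAID 6 array with idle hot spares set aside."""
    active = total_bays - hot_spares
    if active < 4:
        raise ValueError("RAID 6 needs at least 4 active drives")
    return (active - 2) * drive_tb  # two drives' worth of capacity go to parity


raw_tb = 8 * 4.0
usable_tb = raid6_usable_tb(total_bays=8, drive_tb=4.0)
print(f"raw {raw_tb} TB, usable {usable_tb} TB ({usable_tb / raw_tb:.1%} of raw)")
```

In this example three of the eight drives buy no capacity at all, which is the trade of storage cost for protection described above.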

There are distributed filesystem technologies like Ceph, but as the discussion here is about “phase 1” planning for the lab platform, I don’t think that is worth getting into. That family of options can be pretty heavyweight in both learning curve and maintenance responsibility. You may only want to head down that path if one of your goals for your cluster is hands-on experience with distributed filesystems.

Economic Considerations

You have three possible optimization outcomes:

Low cost.

High reliability.

High performance.

You can only pick at most 2 out of the 3 if you push to the extremes, but sometimes you can balance a little in the middle. When it comes to storage, no matter the use case, I see absolutely no win in giving up on high reliability. You’re trying to accomplish things, and having storage fail underneath you does not accomplish things.

That leaves you to decide when to aim for low cost, versus when to emphasize high performance.

I lean towards the following:

The NAS box itself should be capable of high performance. You already have the handicap of data traveling over the network. Just get a good box in the first place.

The drives in the NAS are where you have more flexibility. If you want a lot of storage, you really can’t beat the price point of most good-quality HDDs. If you prefer fast storage then you’ll want to get good-quality SSDs. Your bill and final storage capacity will differ between those choices; just don’t buy junk. This does not mean that you must buy drives marketed as “enterprise grade.” The price points on most of those are stupid. If you have a decent RAID setup, the entire point was to allow RAID to be your source of resilience. While I’m personally not a user of hybrid drives, hybrids could be a reasonable option for a NAS.

For local storage your choice is between HDD and SSD. I use one of each in my compute nodes. The HDD is used for the basic O/S and for the activity that experiences the most churn, like swap and logging traffic. The SSD is used for application data. This setup protects the operating system itself from the risk of lockup due to NAND burn on the SSD, or SSDs failing due to bad firmware (several SSD vendors have had issues). My SSD selection criteria are definitely performance and reliability. HDD purchases have emphasized quality, and sometimes you can luck out by finding unused batches of older product lines where you hit a nice sweet spot that balances good quality, very decent (but not best-of-breed) performance, and fair price.

We won’t get into the meat of disaster recovery during phase 1 — there isn’t any intellectual property to protect yet — but for initial economic planning it is worth considering if you want a spare drive of each kind you use. Both my NAS and the compute nodes use the same model of SSD so I keep one idle SSD in the NAS as a hot spare for a RAID rebuild, but in a pinch I could swap that into a compute node for a failed data drive. I also have a couple of spares of the HDD models used in the compute nodes in case a root volume fails. You’re trying to run a business, and downtime means impaired operations. When you are bootstrapping your lab as a side project, parts delays interleaved with life scheduling may interrupt your plans for weeks.

The Experimentalist : Phase 1: Just a Bunch of Storage © 2025 by Reid M. Pinchback is licensed under CC BY-SA 4.0